GPT-3 vs. data-to-text – AI or text robots: If you are looking for a solution for automated content production, you will quickly come across these two approaches, especially in the current ChatGPT hype. Which process is right for your business? What are the differences? Let’s take a closer look:

Do you want to create content automatically, or do you want to know how text generators work in the first place? Then you have come to the right place. We explain how data-to-text and GPT-3 tools generate text automatically, how they work and where they are used.


Both GPT-3 (the technology behind ChatGPT) and data-to-text (like our text robot) are so-called NLG technologies. NLG stands for “Natural Language Generation” and refers to the automatic generation of texts in natural language. The text is generated by a text robot or, to be more precise, by a piece of software.

NLG tools allow you to produce large amounts of text very quickly: up to 500,000 texts in an hour. Although both GPT-3 and data-to-text are NLG technologies, there are key differences.

What are GPT-3 and data-to-text and what are the differences?

Data-to-text refers to the automated production of natural language text based on structured data. Structured data are attributes that are prepared in such a way that they can be presented in tabular form. Examples of structured data are product data from a PIM system, match data from a soccer game, or weather data – in other words, they contain information that can be used in the texts.

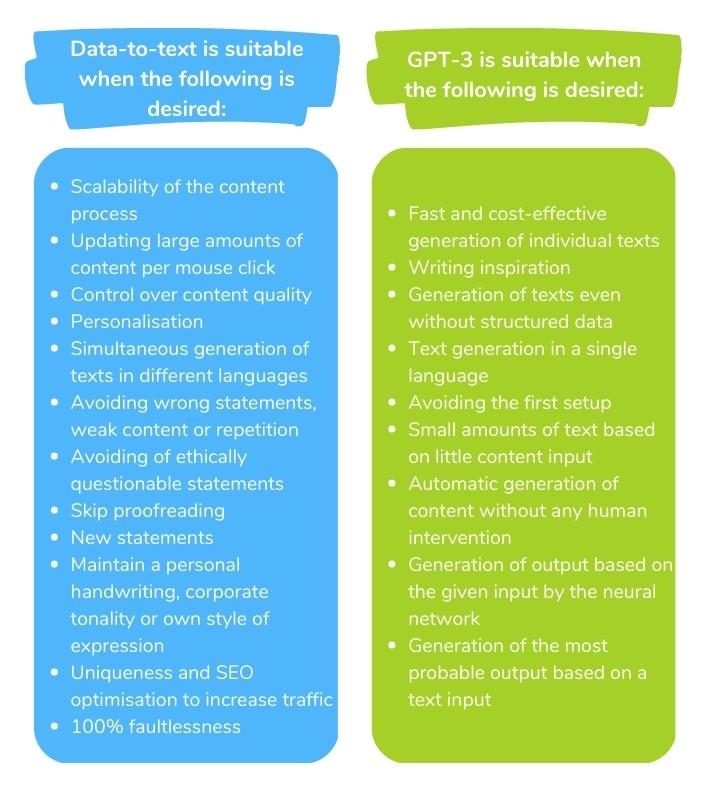

Important to know: The user has control over the text result at all times and can intervene in the text creation at any time to make updates or adjustments. What is more, the texts match the desired tonality, style and flow exactly. This ensures the consistency, meaningfulness and quality of the texts. With full control, the texts will sound exactly the way you want them to. The texts are also personalizable and scalable.

This means that tools based on structured data can, for example, create hundreds of texts on products with variable details in just a few moments. And since every text is produced uniquely, you always have enough content for all your output channels.

In addition, text creation in multiple languages is possible. So you can generate the same text in English, German, Italian and many other languages.
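The idea can be illustrated with a minimal sketch. It is not how any particular data-to-text product works internally. The product rows, the sentence templates and the `render` helper are all assumptions made up for this example. It only shows how one set of structured data can yield a text per product and per language.

```python
# Hypothetical product rows, e.g. exported from a PIM system.
PRODUCTS = [
    {"name": "Trail Runner X", "color": "blue", "weight_g": 280},
    {"name": "City Walker 2", "color": "red", "weight_g": 340},
]

# One sentence template per language (illustrative, not a real tool's syntax).
TEMPLATES = {
    "en": "The {name} comes in {color} and weighs just {weight_g} g.",
    "de": "Das Modell {name} ist in {color} erhältlich und wiegt nur {weight_g} g.",
}

# Attribute values that need translating are looked up per language.
COLOR_NAMES = {
    "en": {"blue": "blue", "red": "red"},
    "de": {"blue": "Blau", "red": "Rot"},
}


def render(product, lang):
    """Fill the template for `lang` with the attributes of one product."""
    values = dict(product, color=COLOR_NAMES[lang][product["color"]])
    return TEMPLATES[lang].format(**values)


# Every product is rendered once per language from the same data.
for product in PRODUCTS:
    for lang in ("en", "de"):
        print(render(product, lang))
```

Adding a row to `PRODUCTS` or changing a value is enough to get new or updated sentences in every language. The wording itself stays fully under the control of whoever maintains the templates.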

So how does GPT-3 work?

The GPT in GPT-3 stands for “Generative Pre-trained Transformer”. This is a language model that attempts to “learn” from existing text and can offer different ways to create a sentence. It has been trained with hundreds of billions of words representing a significant portion of the Internet – including the entire corpus of the English Wikipedia, countless books, and a staggeringly high number of web pages.

Unlike data-to-text, GPT-3 can only be used to generate individual texts, although this can be done quickly. However, the user has no control over the generated content. Multilingual output of the kind data-to-text offers is also hardly possible with GPT-3 yet: you can create a text in English or German or Italian, but only in one language at a time.

The most common myths

- The text robot is somehow a kind of artificial intelligence (AI).

- An artificial intelligence that can somehow rewrite texts.

- There is a kind of “adaptive” algorithm behind it that generates texts that get better and better in quality over time.

- There is hardly any influence on what content the AI produces and how the texts sound. The AI is not there yet.

- I have to expect erroneous statements in automatically produced texts. Such machines are not perfect.

Which technology for generating texts is suitable for which use case?

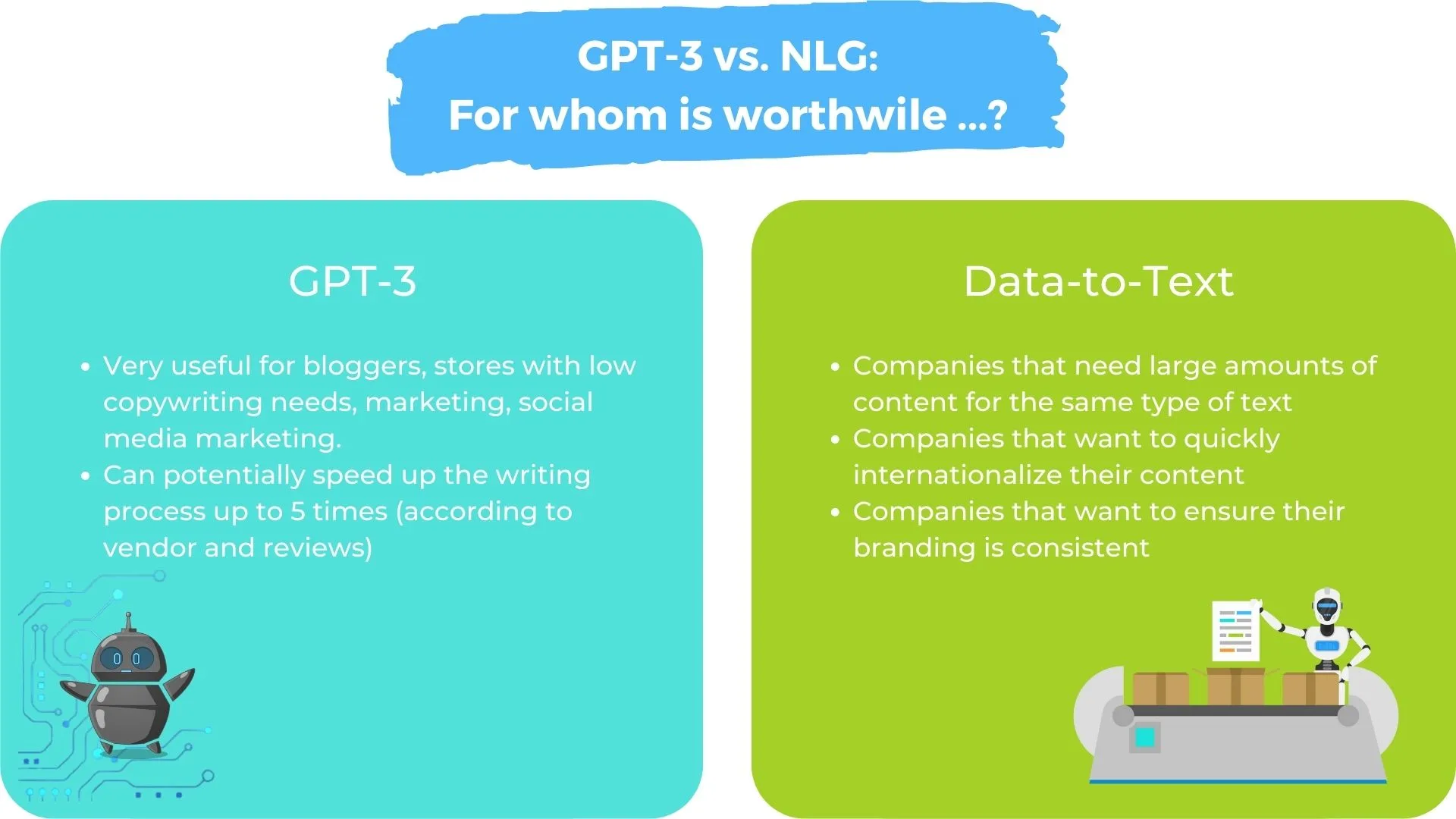

- Which technology is preferable depends on the specific application. GPT-3 is suitable as a source of inspiration or as a rough framework for a continuous text, e.g. a blog post. Data-to-text software, thanks to its scalability, is used in companies that require large amounts of text.

Data-to-text

Data-to-text is used, for example, in industries such as e-commerce, banking, finance, pharmaceuticals, media and publishing.

For e-commerce companies, data-to-text is profitable because it can create very high quality descriptions for many products with similar details, in different languages and with consistent quality. This saves time and money and increases SEO visibility and conversion rates on product pages.

Manually writing large amounts of text, such as thousands of product descriptions for an online store, is almost impossible, especially when these texts need to be revised regularly to keep them up to date, for example due to seasonal influences.

Data-to-text software is very well suited for this use case because, once the project is set up, only the data needs to be updated. The existing texts are then updated immediately, or new and unique texts are created. This relieves copywriters and editors and gives them more time for creative activities and conceptual work.

For pharmaceutical and financial companies, the software is interesting because texts such as reports and analyses can be created automatically from data or statistics.
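How a report sentence can follow from figures is sketched below. This is only an illustration under stated assumptions: the function name `describe_change`, the 0.5% threshold for "stable" and the sample figures are invented for this example and are not taken from any real product. The point is that the wording is chosen by rules applied to the data, so the statement always matches the numbers.

```python
def describe_change(metric, previous, current):
    """Turn two values of a metric into one report sentence."""
    change = (current - previous) / previous * 100  # change in percent

    # Assumed rule: changes below 0.5% are reported as "stable".
    if abs(change) < 0.5:
        return f"{metric} remained stable at {current:,.0f}."

    verb = "rose" if change > 0 else "fell"
    return f"{metric} {verb} by {abs(change):.1f}% to {current:,.0f}."


# Hypothetical quarterly figures.
print(describe_change("Revenue", 1000, 1100))
print(describe_change("Costs", 200, 150))
print(describe_change("Headcount", 400, 401))
```

When the figures for the next period arrive, the same rules produce the updated report without anyone rewriting the sentences.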

GPT-3

The GPT-3 tools can be especially helpful in brainstorming and as a source of inspiration. How valuable a text result is and how much it needs to be edited afterwards usually depends on the topic. The more specific the topic, the more vague and pointless the content seems, as users report in this Reddit thread.

This is because GPT-3 has no real intelligence or general knowledge. The technology can only reproduce patterns from the texts it was trained on and render them as new text. It cannot evaluate, check for accuracy, or filter the statements. Therefore, the content may not make sense or may even contain foul language and ethically questionable statements.

Nevertheless, the use of GPT-3 may well be worthwhile, for example for a basic outline of a text that is then revised, or when a copywriter is struggling with writer’s block and wants to be inspired by the generated text.

GPT-3 can also be quite useful when a large text is to be generated automatically from a small input, or in situations where it is not efficient or practical to have humans create the text output. One example of this is the use of a chatbot to answer recurring customer inquiries.

In the following example, a GPT-3 tool was given the first sentence. The rest was generated by the software. A text was requested about possible activities on hot days. The message of the generated text clearly differs from that of the original sentence:

GPT-3 can both rewrite and continue an input text, as in this example. The model analyzed the input and predicted how the text was most likely to continue. So the hot summer day turns into a day when everyone has to get up at 5 a.m. and clouds and rain roll in. It quickly becomes clear that the desired sentences about possible activities on a hot day cannot be generated in this way. The resulting text can therefore only serve as a basis for inspiration.
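The principle of "continuing with the most likely next word" can be shown with a toy model. This sketch is emphatically not GPT-3: it is a simple word-pair counter, whereas GPT-3 is a large neural network. The tiny corpus and the function names `train` and `continue_text` are assumptions made for illustration. It does show why a continuation follows the patterns in the training text rather than the topic the user had in mind.

```python
from collections import Counter, defaultdict

# Tiny made-up training text; a real model is trained on billions of words.
CORPUS = (
    "on a hot day everyone has to get up at five . "
    "everyone has to get up at five because clouds and rain roll in ."
)


def train(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(model, prompt, max_words=7):
    """Extend the prompt by always appending the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word never had a successor in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = train(CORPUS)
print(continue_text(model, "on a hot day"))
```

The prompt mentions a hot day, but the continuation only reflects what most often came next in the training text. Nothing in the procedure checks whether the result answers the original request.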

Advantages and disadvantages of GPT-3 and data-to-text

Of course, both technologies have their strengths and weaknesses. Both generate texts automatically, but each is suitable for a different use case.

- Data-to-text is based on structured data in machine-readable form. Blog posts and social media posts cannot be meaningfully generated with data-to-text software: blog posts in particular usually deal with changing topics with completely different characteristics and features, and their number is relatively small and disproportionate to the one-time set-up effort, which can be quite extensive. Storytelling and the writing of such posts are thus left to humans, and GPT-3 is a viable aid here as a basis for text creation.

While data-to-text is grounded in the user’s reality through the data it is given, GPT-3 is a neural network solution that generates language from text and has no direct reference to the real world. This means that its texts will inevitably have to be post-edited in order to ensure a certain quality and, above all, to ensure that they are meaningful.

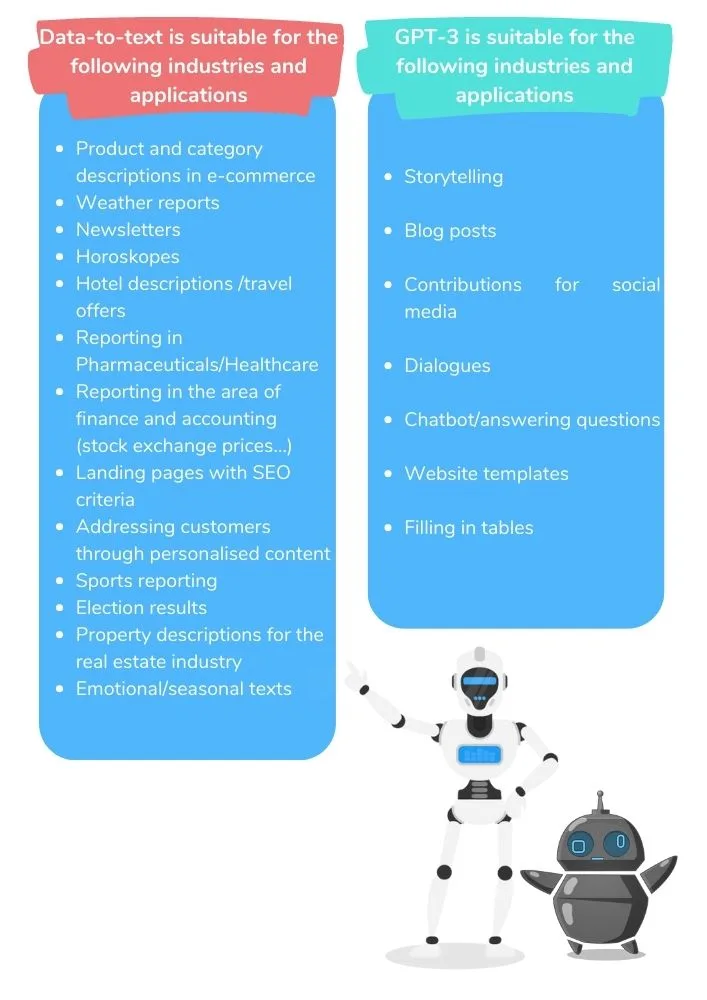

Taking into account that the data-to-text option is always suitable when large amounts of similar content with variable details are to be generated based on structured data sets, we have drawn up the following comparison:

If you consider that the content in GPT-3 does not arise from a data context, it becomes clear which system is best suited for each industry and application:

In general, if special features that stand out from the crowd need to be emphasised and highlighted over thousands of texts, then data-to-text is recommended.

If, on the other hand, it is not efficient or feasible to have the text created by a human, and you do not mind repetitions in place of additional information, especially in longer texts, then you should use GPT-3. This is also the case if there is no capacity for proofreading and fact-checking.

Conclusion on text quality and scalability

Can GPT-3 write texts? The answer is “yes”, but you must always be aware of the goal you are pursuing, the demands you have on your texts and how much effort you are willing to invest yourself! Always keep in mind that GPT-3 is “fed” with the whole internet and then delivers the most likely output. In fact, it is the recognition of a pattern that has already been perceived. This is where we see the limitations of this system, because it cannot “understand” and evaluate.

Although the words used are generated without errors and the grammar rules are applied correctly, the meaning of the statements is often lost. This means that GPT-3 can generate texts, but the result can be of such inferior quality that revising it afterwards saves hardly any time. In addition, users cannot feed GPT-3 with knowledge, for example in the form of data, in order to improve it. In many cases, however, this is a decisive prerequisite for use. This is where data-to-text comes in, because it is fed with structured data and generates text based on it. However, it depends on the quality of the data, and the initial project setup is more time-consuming.
